I had set up Kubernetes on my local laptop and tried a few things with it 2-3 years back. In the meantime the laptop got replaced, and recently I got some work around Kubernetes, so it was time to set it up again.

I found that Docker Desktop now supports Kubernetes, so I thought it would be pretty easy to get it back up again. I went to Docker Desktop -> Settings -> Kubernetes and selected Enable Kubernetes. Within a few minutes it was enabled and kubectl was available on the command prompt. Wow, it's all done.

Now the problems started.

First, when I ran kubectl version, I got the client version, but for the server version I got:

```
kubectl unable to connect to server: x509: certificate signed by unknown authority
```

I spent quite a few hours trying to work this out. There is plenty of documentation on the web about this error, but nothing specific to Docker Desktop. Despite a lot of trial and error it still did not work, so I abandoned it and went back to my trusty old minikube.

First I had to remove Kubernetes from Docker Desktop, but it would not let me do it!! So I had to uninstall Docker Desktop altogether.

Then I installed the following using Chocolatey:

- Install kubectl : choco install kubernetes-cli

- Install Minikube : choco install minikube

Then I reinstalled Docker Desktop as well, since I had uninstalled it earlier.

All done, let's check that everything is fine:

- Start the cluster : minikube start

- Check kubectl : kubectl version

```
Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.0", GitCommit:"cb303e613a121a29364f75cc67d3d580833a7479", GitTreeState:"clean", BuildDate:"2021-04-08T16:31:21Z", GoVersion:"go1.16.1", Compiler:"gc", Platform:"windows/amd64"}
Server Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.2", GitCommit:"faecb196815e248d3ecfb03c680a4507229c2a56", GitTreeState:"clean", BuildDate:"2021-01-13T13:20:00Z", GoVersion:"go1.15.5", Compiler:"gc", Platform:"linux/amd64"}
```

- Check cluster : kubectl cluster-info

```
Kubernetes control plane is running at https://127.0.0.1:61152
KubeDNS is running at https://127.0.0.1:61152/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
```

- All good, let's start the dashboard : minikube dashboard

```
* Enabling dashboard ...
- Using image kubernetesui/dashboard:v2.1.0
- Using image kubernetesui/metrics-scraper:v1.0.4
* Verifying dashboard health ...
* Launching proxy ...
* Verifying proxy health ...
* Opening http://127.0.0.1:60036/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/ in your default browser...
```

The dashboard opens in the browser, showing the brand new Kubernetes cluster in all its glory :)

So we have our K8s cluster set up. Let's start some sample applications to test it out and revise some of the old stuff. I started with nginx, since I will be working on an application that has an HTTP interface.

First, create the nginx.yml file for the deployment:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - image: nginx:latest
          name: nginx
```

and apply it to the K8s cluster:

```
> kubectl apply -f nginx.yml
deployment.apps/nginx created
```

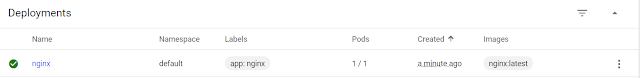

Go to the MiniKube dashboard and under deployments, you will find:

Under Pods, you will find:

The equivalent command-line checks are:

- kubectl get deployments

- kubectl get pods

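So all good: we have deployed nginx, which is running in a container within a pod. Next, declare the port nginx listens on (80) in the container spec. Strictly speaking this is informational, since nginx already listens on port 80 inside the pod, but it documents the port and gives it a name. Update the YAML file to: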

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - image: nginx:latest
          name: nginx
          ports:
            # Port nginx listens on inside the container, named "nginx"
            - containerPort: 80
              name: nginx
```

and apply it again: kubectl apply -f nginx.yml

So now the container declares port 80, on which nginx receives requests. However, this port is only reachable inside the K8s cluster (via the pod's IP address), not from the outside world or the host. To expose it, we need to create a Service.

Create nginx_service.yml as follows:

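```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  # Send traffic to pods carrying the label app: nginx
  selector:
    app: nginx
  ports:
    - protocol: TCP
      targetPort: 80   # containerPort that nginx listens on
      port: 8082       # port the Service itself exposes
```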

and apply it:

```
> kubectl apply -f nginx_service.yml
service/nginx created
```
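As an optional variation: because the Deployment named its container port nginx, the Service can refer to that port by name instead of by number. A minimal sketch, assuming the nginx.yml with the ports section above is applied unchanged; applied in place of nginx_service.yml, it should produce the same nginx service.

```yaml
# Hypothetical variant of nginx_service.yml: targetPort refers to the
# container port by its name instead of by its number.
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 8082          # port the Service exposes inside the cluster
      targetPort: nginx   # resolves to containerPort 80 named "nginx" in nginx.yml
```

The advantage is that the container port number can later change in the Deployment alone, as long as the name stays the same.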

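In the minikube dashboard, you will find the new nginx service. As you can see, it exposes port 8082 for the pods labelled app: nginx and forwards the traffic to their port 80. Since the manifest does not set a type, this is a ClusterIP Service, so port 8082 is reachable only from inside the cluster; from the host it can be reached with, for example, kubectl port-forward service/nginx 8082:8082 and then http://localhost:8082.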
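Because the Service above sets no type, it defaults to ClusterIP and is not directly reachable from the host. One way to reach nginx from the host on minikube is a NodePort Service. A minimal sketch, assuming the same Deployment and the same Service name; only the type line is new, and nodePort is deliberately left out so Kubernetes assigns one.

```yaml
# Hypothetical NodePort variant of nginx_service.yml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  type: NodePort          # also opens a port on the minikube node
  selector:
    app: nginx            # matches the pod labels from the Deployment
  ports:
    - protocol: TCP
      port: 8082          # Service port inside the cluster
      targetPort: 80      # containerPort nginx listens on
      # nodePort omitted: assigned from the NodePort range (30000-32767 by default)
```

After applying it with kubectl apply -f nginx_service.yml, running minikube service nginx --url should print a URL that reaches nginx from the host. Depending on the minikube driver (for example the Docker driver on Windows), that command may need to keep running to hold a tunnel open.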

All good. Now let's get going with app development and deployment on the K8s cluster :)